Intel VCA Cards

Introduction

If you ever see an Intel VCA or Intel VCA2 card come up on eBay, r/hardwareswap, or r/homelabsales, read through this first. I plan to go over what this card is, what it’s (probably) meant for, who the target audience is, and why it definitely isn’t me (even though I’m definitely going to be holding onto a couple).

Backstory

Rewind about 2 months. r/homelabsales has a post in my area about some free servers. I say why not, and send in a PM. I go to pick up the servers and, lo and behold, the ‘seller’ forgot a few PCIe cards in both of the two 1U Supermicro GPU servers he was giving away. “Sweet, love to check out some new interesting hardware”, I thought. They were nice and shiny, with a clean silver full-body case and no indication of the intricacies inside other than a nice clean grey set of lettering: “VCA Intel Visual Compute Accelerator”.

Now, I like to consider myself a fairly competent individual. When you say “Accelerator”, I assume some kind of expansion card like a GPU. These VCA cards are a whole different animal altogether.

If you didn’t already notice, “VCA” stands for Visual Compute Accelerator. It’s a single PCIe slot card that also takes an 8 pin and a 6 pin PCIe power connector. Inside the card are 3 distinct processing units. On the VCA cards I received, there are 3 separate 47W quad core “E3-1200 v4 product series” CPUs, 3 separate sets of 2 SODIMM DDR3L slots, and 3 separate Intel Iris Pro P6300 GPUs, all for a total power budget of 235W.

These cards are almost explicitly targeted at the video transcode market, and at that they do an extremely good job. Intel Quick Sync is actual magic, and having 3 of those chips on 3 distinct systems with zero CPU bottlenecking must be an actual dream for companies that do this kind of work. And surprise, the company that I was picking these servers up from did that kind of work. They had the VCA2 cards, which upgrade the CPU a generation, move to DDR4, and have a few other nice bits and pieces that I may get into later.

Now for the most complicated part of this writeup (or so I hope, I guess). The Intel VCA card (from here on I’m just going to say VCA, I’m tired of typing “Intel” at this point) was never sold to consumers. Consumers would have no need for this kind of object; it just isn’t something that most people would ever even consider buying, let alone at that pricing ($2000-$5000 brand new!). Even the general enterprise market wasn’t really the target. You essentially would buy a prebuilt server with these cards in it, preconfigured and ready to use. The primary configurator seemed to be Supermicro, as the Dell version has plenty of caveats to support this ogre’s swamp of silicon.

So, now it comes into the hands of the humble homelabber via two Supermicro SYS-1028GQ-TR (wow I sure love Supermicro model numbers very much). 5 VCA cards in two 4 GPU ready servers. Each server has dual redundant 1000W power supplies (I should have realized this was going to be hard the second I saw those), dual E5-2683v3, 64GB of DDR4 RAM, and proper GPU risers for all slots in all servers.
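For a sense of scale, here is a quick back-of-the-envelope sketch in Python that multiplies out the per-card numbers above across all 5 cards. The constant and function names are my own, and the CPU figure is just the sum of the rated 47W TDPs, not a measured draw.

```python
# Per-card figures taken from the description above.
NODES_PER_CARD = 3          # three CPU/memory/GPU units per card
CORES_PER_NODE = 4          # quad core CPUs
CPU_TDP_W = 47              # rated TDP per CPU, not measured draw
CARD_POWER_BUDGET_W = 235   # total power budget per card


def totals(cards):
    """Return aggregate node, core, and power figures for a number of cards."""
    nodes = cards * NODES_PER_CARD
    return {
        "nodes": nodes,
        "cores": nodes * CORES_PER_NODE,
        "cpu_tdp_w": nodes * CPU_TDP_W,
        "card_budget_w": cards * CARD_POWER_BUDGET_W,
    }


if __name__ == "__main__":
    for name, value in totals(5).items():
        print(f"{name}: {value}")
```

With 5 cards that works out to 15 nodes and 60 cores, with 1175W of combined card power budget spread across the two servers.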

I know, I know, get on with it. Well, here we go.

VCA requires CentOS 7 (with a special kernel) on the host. The nodes themselves require CentOS 7, some ancient version of Debian, or Windows 10 via a convoluted process I’ve repeatedly failed at. So not quite end of life, but sure damn close, and sure not something I want to be running in prime time on systems I expect to work reliably. While investigating the opportunities to upgrade the card, I realized how truly, awfully, brutally alone I would be in this search.

Nobody, and I mean NOBODY, on the general internet has actually put real time into these cards and documented their experience in any meaningful way. Serve The Home had an article where they essentially went over what I’ve spoken about thus far, and said “Yep, we’ll put out an article with details of running a K3s cluster on it soon!”. Spoiler alert, they didn’t. Der8auer had a VCA2 card, took it apart, made a nice video on it, and ended there. All of this was followed up by finding an AnandTech article about how these cards were EOL years ago at time of writing. So now here I am with these two monster servers that I’d received for free, and 5 of these PCIe cards for which the only documentation seemed to be a couple of Intel PDFs and my best guess + intuition.

So now I’ve done some research. I’ve done some reading. I’ve dug through the official PDF user guides about this special silicon. I’ve got the BIOS, bootloader, example disk images, CLI tool, and CentOS 7 on a spare SSD with the *special* kernel. Plug the cards into the server, plug the PCIe power plugs in, power the server on and WOOOSH, the fans turn on at full earsplitting 100% speed. I’m not new to enterprise hardware, but my R630 could only dream of making this kind of noise. I made the unfortunate mistake of doing this while in my office, right next to this jet engine that was doomed to stay grounded. After sorting through the IPMI controls to turn the fans down, I had a (still reasonably loud but acceptably) quiet system I could work on in peace.

Actual Details

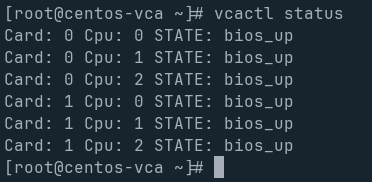

First step was even seeing that there was a card in the first place. The tool is called vcactl, and it’s surprisingly easy to use considering the end users of these cards. vcactl is the only way to interact with the cards, and the only way to interact with each of the nodes individually therein. I won’t go into too much detail about specific commands unless I’m describing using them, but generally the basic setups make sense. Lots of pieces of vcactl won’t work for me, as many conveniences are for VCA2 cards, but I can get plenty of usage as needed.

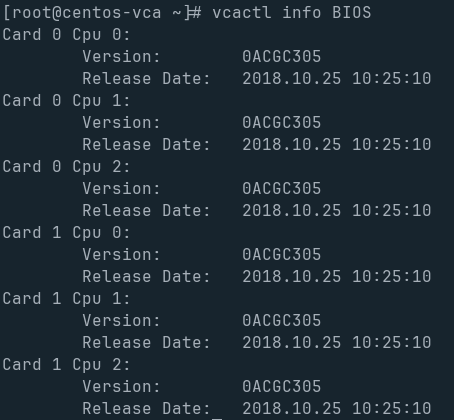

The logical second step was to update the BIOS and bootloader, as when these cards were received, both were blindingly old. Personally, if I’m starting fresh with something, I’d rather start first and foremost at the latest version of a software stack, and learn backwards. This made a lot more sense to do with these, as each node’s BIOS (a node is one of the 3 CPU/memory/GPU combos on the cards, I’ll refer to those as nodes) had an upgradable path and a “golden” path that could be selected via a jumper on the back of the card. So if for some reason I really screwed up and broke a card’s BIOS, I would be able to easily reset back to a known good state with pretty low effort.

Upgrading the BIOS and bootloader was a mess in and of itself, but within a few days I was able to get all 5 cards up to the latest BIOS and bootloader, and at least reporting a healthy-ish status.

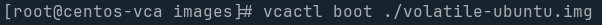

Now there was really nothing left to do but try to boot the cards and wait…

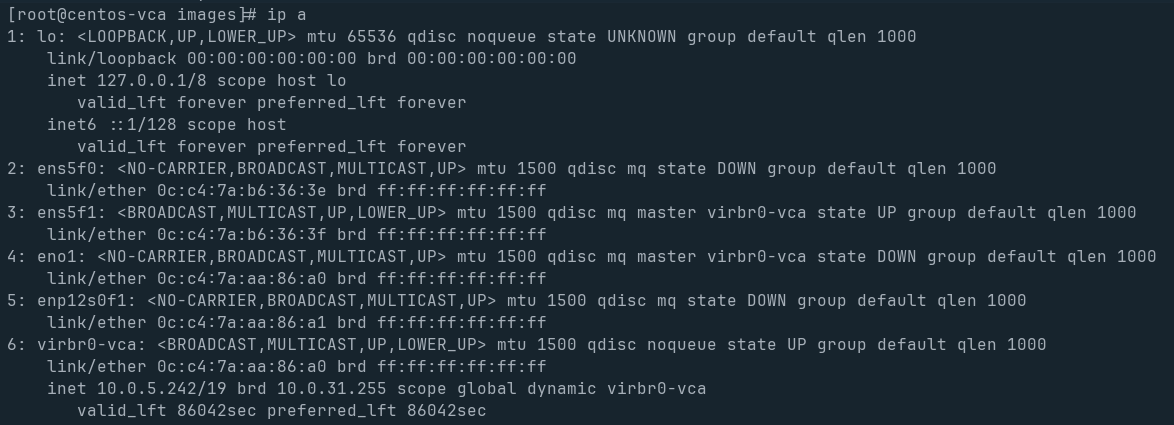

Turns out, I missed a few critical steps. These cards support a variety of networking options, including bridged mode and raw DHCP mode. I’m going to use the bridge, and see how that goes from there.

Okay, now let’s try that again…

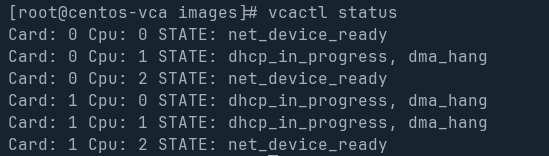

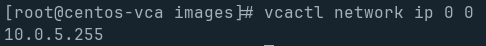

Ah! net_device_ready.. on a few. dma_hang though, that can’t be good.. Just shuffle that under the rug, let’s see if I can get to one of the online cards.
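With 15 nodes to keep an eye on, sorting them by state gets old fast when done by eye. Here is a minimal Python sketch that groups nodes by their reported state. The `Card: N Cpu: N STATE: ...` line layout and the sample text are my assumption of what the status output looks like, not a verified capture, so adjust the parsing to whatever your vcactl actually prints.

```python
from collections import defaultdict

# Hypothetical sample of per-node status lines. The real vcactl output may be
# laid out differently; this format is an assumption for illustration only.
SAMPLE_STATUS = """\
Card: 0 Cpu: 0 STATE: net_device_ready
Card: 0 Cpu: 1 STATE: net_device_ready
Card: 0 Cpu: 2 STATE: dma_hang
Card: 1 Cpu: 0 STATE: net_device_ready
"""


def group_nodes_by_state(status_text):
    """Map each reported state to the list of (card, cpu) pairs in that state."""
    groups = defaultdict(list)
    for line in status_text.splitlines():
        fields = line.split()
        # Expected shape: Card: <n> Cpu: <n> STATE: <state>; skip anything else.
        if (
            len(fields) != 6
            or fields[0] != "Card:"
            or fields[2] != "Cpu:"
            or fields[4] != "STATE:"
        ):
            continue
        groups[fields[5]].append((int(fields[1]), int(fields[3])))
    return dict(groups)


if __name__ == "__main__":
    for state, nodes in sorted(group_nodes_by_state(SAMPLE_STATUS).items()):
        print(state, nodes)
```

Anything sitting in `dma_hang` goes on the "deal with later" pile, and the `net_device_ready` list is where to try logging in.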

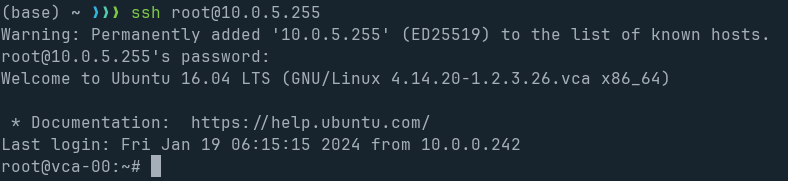

(This was far more painful than it seemed, as the usernames and passwords of these base images are a single line of documentation hidden away. To join in on the fun, the default username is root, and the default password is vista1.)

Bingo, that’s a shell.

Now is where I should really be saying, “wait for part 2”, but I’ve got time now, so.. here we go.

GETTING K3S RUNNING

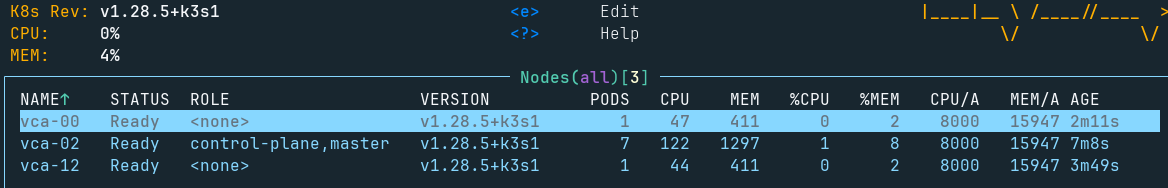

The real goal of this entire project was to do what STH refused, failed, or otherwise forgot about.

I’m going to make these a lil’ Kubernetes cluster!

Okay, I got carried away, and it’s way too late to be messing with this right now, but STH, eat your heart out.